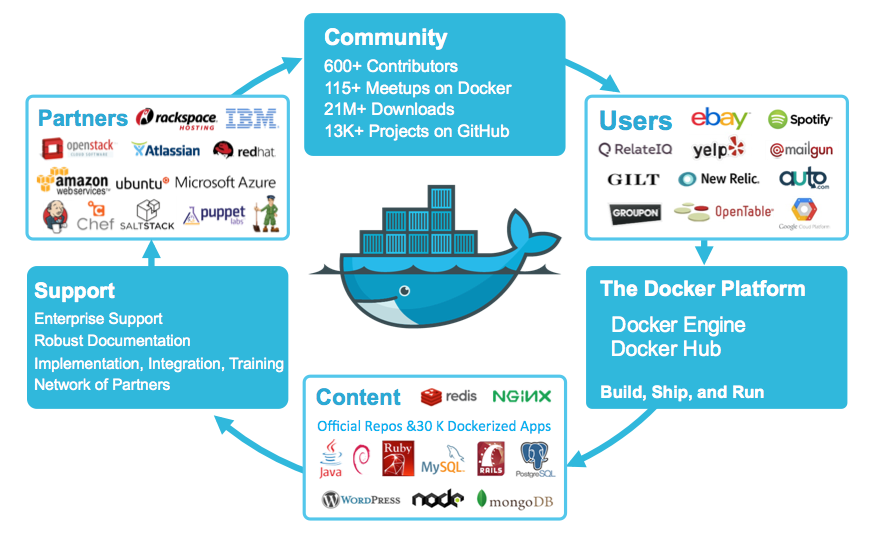

In today’s fast-paced software development landscape, containerisation has become crucial for streamlining development, testing, and deployment processes. Docker, in particular, has emerged as a popular containerisation platform offering numerous benefits for developers and operations teams. In this blog post, we will explore the basics of Docker, its benefits in software development, and how it differs from other containerisation tools such as Podman, along with tips for using Docker efficiently and securely. Whether you’re new to Docker or looking to enhance your containerisation skills, this guide has you covered. Let’s unlock the power of Docker and take your software development to the next level!

Understanding Docker: The Basics and Benefits

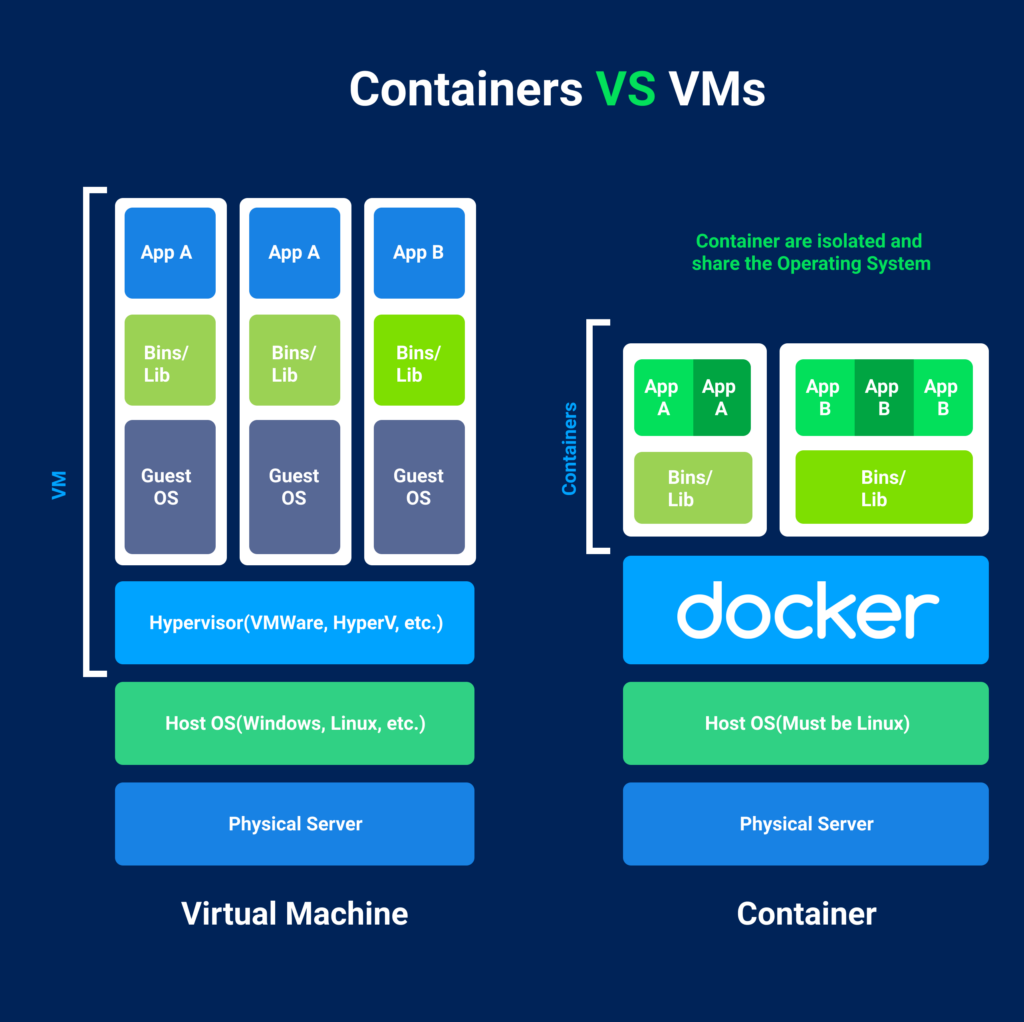

Docker is a powerful containerisation platform that allows you to easily package, distribute, and run applications in a lightweight, portable environment. It isolates applications and their dependencies into containers, self-contained environments that can be run on any machine with Docker installed.

There are several key benefits of using Docker for containerisation:

- Portability: Docker containers run on any machine with a compatible container runtime, making it easy to deploy applications across different environments without worrying about dependencies or compatibility issues.

- Efficiency: Docker uses a layered file system and shared resources, allowing for efficient use of system resources. It also enables faster deployment and scaling of applications.

- Isolation: Docker provides process-level isolation, ensuring that applications running in containers and the host system are isolated from each other. This enhances security and reduces the risk of conflicts between applications.

- Reproducibility: Docker enables you to define the environment and dependencies for your application in a Dockerfile, making it easy to reproduce the same environment in different stages of the software development lifecycle.

By leveraging Docker, software development teams can simplify the process of building and deploying applications, leading to improved productivity, scalability, and reliability. The following section explores common use cases for Docker in modern software development.

Exploring the Use Cases for Docker in Modern Software Development

Docker has become an essential tool in modern software development due to its versatility and efficiency. Let’s explore some common use cases for Docker and how it streamlines the software development process.

Containerisation for Development Environments

Docker provides developers with the ability to create isolated and reproducible development environments. Developers can package all the necessary application dependencies and configurations using Docker images. This ensures that the development environment remains consistent across different machines and eliminates the “it works on my machine” problem. With Docker, developers can easily share and distribute their development environments, making collaborative development much more efficient.

Simplifying Software Testing

Docker simplifies software testing by providing a consistent environment to run tests. By containerising the test environment, developers can ensure that tests run in the same conditions regardless of the underlying host system. This makes it easier to write tests that are reliable and reproducible. Additionally, Docker’s ability to create and destroy containers quickly allows for parallel testing, speeding up the overall testing process.

Streamlining Continuous Integration and Deployment

Docker is crucial in continuous integration and deployment (CI/CD) pipelines. By containerising applications, developers can easily package and deploy their software across different environments, from development to production. Docker images can be built and pushed to container registries, making deploying new application versions simple. Docker also facilitates blue-green deployment and canary releases by allowing easy switching between container versions, reducing downtime and risk during deployment.

Scaling and Load Balancing

Docker’s containerisation capabilities make scaling applications a breeze. By running multiple container instances, applications can be scaled horizontally to handle increased traffic or workload. Container orchestration tools like Docker Swarm or Kubernetes further simplify scaling by automatically managing container distribution across multiple hosts. Additionally, the load balancing built into these orchestrators distributes traffic among containers, optimising resource utilisation and improving application performance.
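To make the idea of spreading traffic across replicas concrete, here is a minimal sketch of round-robin assignment in Python. It only illustrates the concept; it is not how Docker Swarm or Kubernetes implement load balancing internally, and the replica names are hypothetical.

```python
from itertools import cycle


def assign_round_robin(requests, replicas):
    """Assign each request to a replica in strict rotation."""
    if not replicas:
        raise ValueError("at least one replica is required")
    rotation = cycle(replicas)
    return [(request, next(rotation)) for request in requests]


# Hypothetical names for three instances of the same image.
replicas = ["web-1", "web-2", "web-3"]
assignments = assign_round_robin(range(6), replicas)
```

With six requests and three replicas, each replica receives two requests, which is the even spread that horizontal scaling relies on.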

Docker’s use cases in modern software development are vast and varied. From streamlining development environments to efficient testing and deployment, Docker has revolutionised the software development process, making it more efficient, reliable, and scalable.

Docker vs. Podman: A Comparison of Containerization Tools

When it comes to containerisation tools, Docker and Podman are two popular options. While they serve a similar purpose, they differ in some fundamental ways. This section compares Docker and Podman in terms of features, performance, and security.

Key Differences Between Docker and Podman

Docker and Podman have several differences that distinguish them from each other. Some of the key differences include:

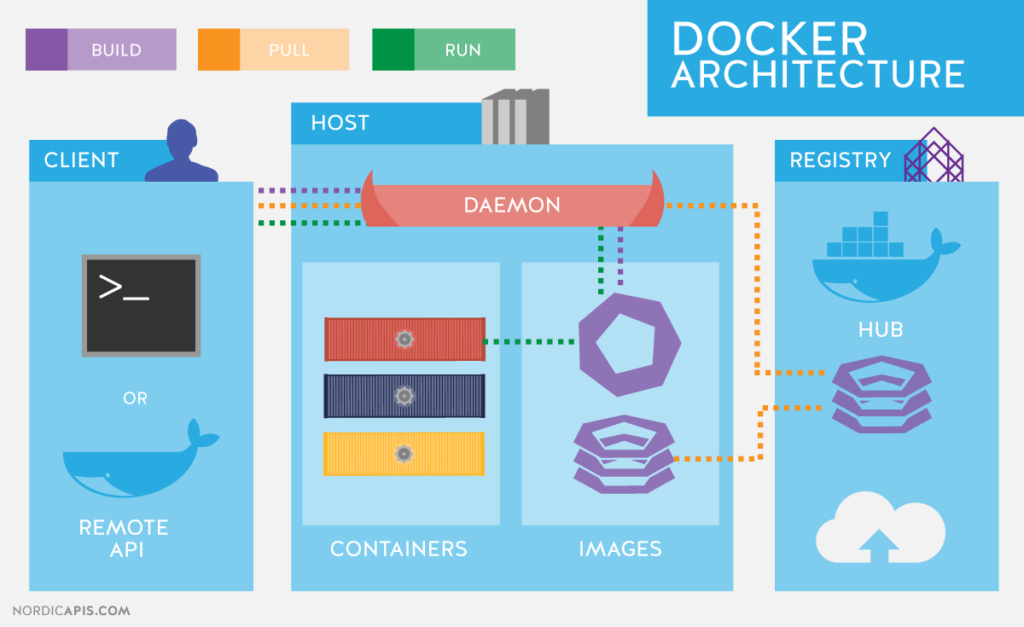

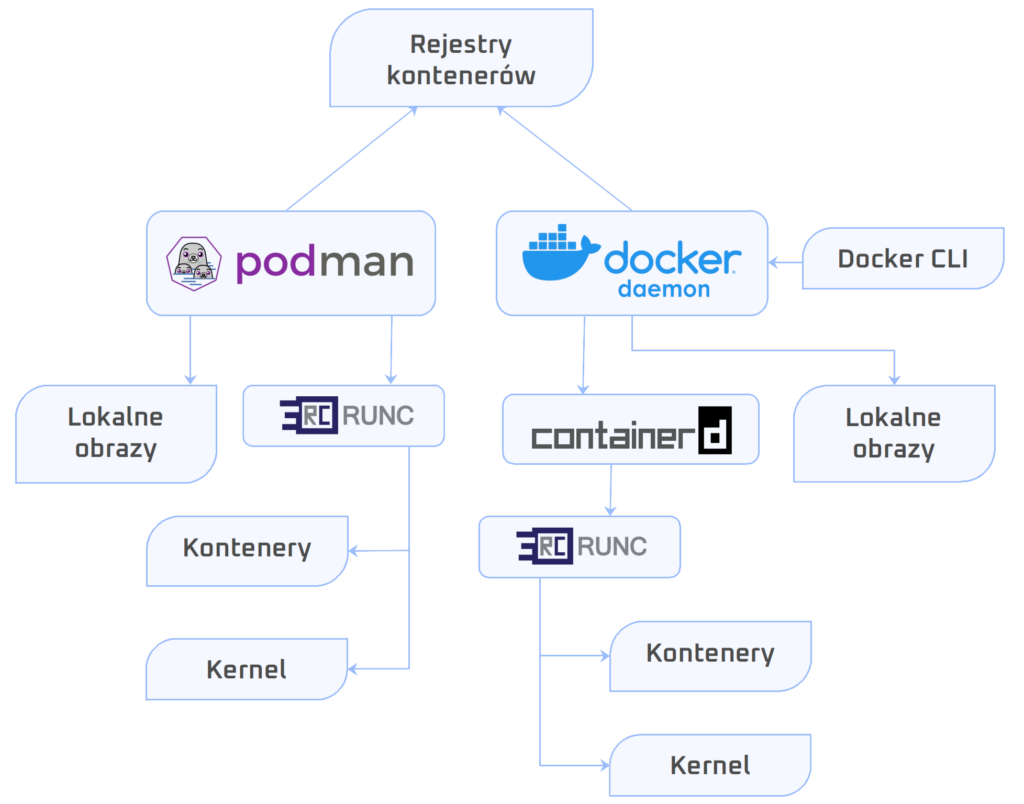

- Architecture: Docker uses a client-server architecture, where the Docker daemon manages containers. On the other hand, Podman is daemonless and relies on the user’s permissions to manage containers.

- Rootless Containers: Docker’s daemon runs as root by default (a rootless mode is available but has to be set up separately), whereas Podman runs containers as a regular user by default.

- Image Management: Docker pulls images from Docker Hub by default, while Podman resolves image names against the registries listed in its configuration. Both tools can push to and pull from any compatible registry.

- Deployment: Docker includes Docker Compose for managing multi-container applications, while Podman offers pods (via the podman pod command) and the separate Podman Compose project for similar functionality.

Performance and Security Comparison

In terms of performance and security, Docker and Podman also have some differences:

- Performance: Docker is known for its maturity and widespread adoption, which often translates into better community support and a broader range of available tools. Podman, being relatively newer, is catching up in performance improvements.

- Security: Both Docker and Podman prioritise container security. However, Podman’s rootless containers and tighter integration with Linux namespaces and cgroups provide an added layer of protection.

Choosing Between Docker and Podman

When choosing between Docker and Podman, several factors should be considered:

- Use Case: Evaluate your requirements and determine which tool aligns better with your use case.

- Compatibility: Consider your environment’s existing infrastructure, tools, and ecosystems and choose the tool that integrates seamlessly.

- Experience and Support: Assess your team’s knowledge and experience with Docker or Podman and the availability of community support and resources for each tool.

Ultimately, the decision between Docker and Podman depends on your specific needs and preferences. Both are powerful containerisation tools that can facilitate efficient software development and deployment processes.

Getting Started with Docker: Installation and Setup

Installing Docker

To start using Docker, you need to install it on your machine. Follow these steps to install Docker:

- Ensure that your operating system meets the prerequisites for running Docker.

- Visit the Docker website and download the appropriate Docker package for your operating system.

- Run the Docker installer and follow the instructions provided.

- After the installation is complete, verify that Docker is correctly installed by opening a terminal or command prompt and running the command:

docker --version
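If you want to check the installed version from a script, the output of the command above can be parsed. The following is a small sketch in Python; the sample line follows the format the Docker CLI prints, but the version and build values are illustrative only.

```python
import re


def parse_docker_version(output):
    """Extract (major, minor, patch) from `docker --version` output."""
    match = re.search(r"Docker version (\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError(f"unrecognised version output: {output!r}")
    return tuple(int(part) for part in match.groups())


# Illustrative sample line, not captured from a real machine.
sample = "Docker version 24.0.7, build afdd53b"
version = parse_docker_version(sample)
```

In a real script you would obtain the text with something like `subprocess.run(["docker", "--version"], capture_output=True, text=True).stdout` instead of the hard-coded sample.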

Configuring Docker for Different Operating Systems

Docker can be configured differently depending on your operating system. Here are some resources to help you configure Docker for various operating systems:

- Windows: Refer to the Docker documentation for instructions on configuring Docker for Windows.

- macOS: Visit the Docker website and follow the instructions on configuring Docker for macOS.

- Linux: Check the official Docker documentation for guidance on configuring Docker for different Linux distributions.

Prerequisites for Running Docker

Before running Docker on your machine, ensure that you meet the following prerequisites:

- Your machine should have enough resources (CPU, memory, storage) to run Docker containers.

- If you are using Docker Desktop, ensure that hardware virtualisation is enabled in your BIOS/UEFI settings.

- If you are using Linux, ensure the kernel modules required by your distribution’s Docker package are installed.

Diving Into Docker: A Step-by-Step Guide to Containerizing Applications

Containerisation with Docker is a powerful tool that allows developers to package and distribute applications efficiently. In this section, we will walk through the step-by-step process of containerising an application using Docker.

Step 1: Installing Docker

The first step is to install Docker on your machine. You can download and install Docker from the official Docker website. Follow the instructions specific to your operating system.

Step 2: Building a Dockerfile

A Dockerfile is a text file that contains all the instructions needed to build a Docker image. Start by creating a new file named “Dockerfile” in your project directory. Within this file, you will define the base image, copy the necessary files, and specify any dependencies or configurations.
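As a rough illustration of what such a file contains, the Python sketch below renders a minimal Dockerfile as text. The base image, file names, and commands are assumptions chosen for a hypothetical Python application; adapt them to your own project.

```python
import json


def render_dockerfile(base_image, workdir, dependency_file, install_command, start_command):
    """Render a minimal Dockerfile as a string."""
    lines = [
        f"FROM {base_image}",             # base image
        f"WORKDIR {workdir}",             # working directory inside the image
        f"COPY {dependency_file} .",      # copy the dependency manifest
        f"RUN {install_command}",         # install dependencies
        "COPY . .",                       # copy the application source
        "CMD " + json.dumps(start_command),  # exec-form start command
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values for a small Python application.
dockerfile = render_dockerfile(
    base_image="python:3.12-slim",
    workdir="/app",
    dependency_file="requirements.txt",
    install_command="pip install --no-cache-dir -r requirements.txt",
    start_command=["python", "app.py"],
)
```

Writing `dockerfile` to a file named Dockerfile in the project directory gives you the starting point for the next step.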

Step 3: Building the Docker Image

Once you have defined the Dockerfile, you can build the Docker image by running the “docker build” command. This command will read the instructions in the Dockerfile and create a new image based on those instructions.
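For example, the build command can be assembled like this. This is a sketch only: the image name `my-app` and the tag are hypothetical, and the function merely builds the argument list rather than running Docker.

```python
import shlex


def docker_build_command(image, tag, context=".", dockerfile=None):
    """Build the argument list for `docker build`."""
    command = ["docker", "build", "--tag", f"{image}:{tag}"]
    if dockerfile is not None:
        # Only needed when the Dockerfile is not at the default location.
        command += ["--file", dockerfile]
    command.append(context)  # build context directory
    return command


build = docker_build_command("my-app", "1.0.0")
build_line = shlex.join(build)  # the same command as a single shell line
```

Here `build_line` is the command you would type in a terminal from the project directory.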

Step 4: Running the Docker Container

After successfully building the Docker image, you can run a Docker container based on that image. Use the “docker run” command to start a new container, specifying any necessary options or configurations.
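A typical invocation might look like the sketch below, which assembles the arguments for a detached container with a published port and an environment variable. The container name, port numbers, and variable are hypothetical.

```python
def docker_run_command(image, name, ports=None, env=None, detach=True):
    """Build the argument list for `docker run`."""
    command = ["docker", "run"]
    if detach:
        command.append("--detach")  # run in the background
    command += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        command += ["--publish", f"{host_port}:{container_port}"]
    for key, value in (env or {}).items():
        command += ["--env", f"{key}={value}"]
    command.append(image)  # the image always comes after the options
    return command


run = docker_run_command(
    "my-app:1.0.0",
    name="my-app",
    ports={8080: 80},
    env={"APP_ENV": "production"},
)
```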

Step 5: Testing and Debugging

With the Docker container running, you can test your application within the container environment. Use the appropriate tools and techniques to debug any issues that may arise.

Step 6: Distributing the Docker Image

Once satisfied with your containerised application, you can distribute the Docker image to other environments or users. This can be done by pushing the image to a Docker registry or sharing the image file directly.
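Both distribution routes can be sketched as follows. The registry host `registry.example.com` and the repository name are placeholders, not real endpoints; the functions only assemble the commands.

```python
def registry_commands(local_image, registry, repository, tag):
    """Commands to tag a local image for a registry and push it."""
    remote = f"{registry}/{repository}:{tag}"
    return [
        ["docker", "tag", local_image, remote],
        ["docker", "push", remote],
    ]


def save_command(image, archive_path):
    """Command to export an image to a tar archive for direct sharing."""
    return ["docker", "save", "--output", archive_path, image]


push_steps = registry_commands("my-app:1.0.0", "registry.example.com", "team/my-app", "1.0.0")
export_step = save_command("my-app:1.0.0", "my-app-1.0.0.tar")
```

The recipient of the archive can import it with `docker load`.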

Step 7: Updating and Versioning

As your application evolves, you may need to update the Docker image. By following best practices for versioning, you can ensure that different versions of your application can be deployed and managed effectively.
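One common convention (an assumption here, not something Docker enforces) is to publish each release under several tags derived from its semantic version, so that users can pin to an exact release or follow a release line. A minimal sketch:

```python
def version_tags(version, include_latest=True):
    """Derive image tags from a MAJOR.MINOR.PATCH version string."""
    parts = version.split(".")
    if len(parts) != 3 or not all(part.isdigit() for part in parts):
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {version!r}")
    major, minor, patch = parts
    tags = [f"{major}.{minor}.{patch}", f"{major}.{minor}", major]
    if include_latest:
        tags.append("latest")
    return tags


tags = version_tags("1.4.2")
```

Pinning deployments to the full version tag keeps rollbacks predictable, while the shorter tags move forward with each compatible release.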

By following these steps, you can harness the power of Docker to containerise your applications, making them more portable, scalable, and efficient.

Optimising Docker Performance: Tips and Best Practices

When it comes to optimising Docker performance, there are several tips and best practices that can help you achieve optimal results. Here are some key considerations:

1. Use Lightweight Base Images

Choosing lightweight base images for your Docker containers can significantly improve performance. These images are smaller and have fewer components, resulting in faster startup times and reduced resource consumption.

2. Minimize Layers

Reducing the number of layers in your Docker image can improve performance. Each layer adds overhead, so consolidating them as much as possible can help streamline the container startup process.
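One way to consolidate layers is to chain related shell commands into a single RUN instruction. The sketch below shows the transformation in Python; the package commands are an illustrative example for a Debian-based image.

```python
def merge_run_instructions(commands):
    """Combine shell commands into one RUN instruction, i.e. one layer."""
    if not commands:
        raise ValueError("at least one command is required")
    # Each command is joined with `&&` so a failure stops the build.
    return "RUN " + " \\\n    && ".join(commands)


merged = merge_run_instructions([
    "apt-get update",
    "apt-get install -y --no-install-recommends curl",
    "rm -rf /var/lib/apt/lists/*",
])
```

Written as three separate RUN instructions, these commands would produce three layers, and the files removed by the last one would still occupy space in the earlier layers.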

3. Optimize Resource Allocation

It’s essential to allocate the right resources to your Docker containers. This includes CPU, memory, and disk space. Balancing these resources ensures optimal performance and prevents resource contention issues.
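As a sketch of how limits are expressed on the command line, the helper below builds the `--cpus` and `--memory` options for `docker run`. The limit values and image name are hypothetical and should be tuned to your workload.

```python
def resource_flags(cpus=None, memory=None):
    """Build `docker run` options that cap CPU and memory usage."""
    flags = []
    if cpus is not None:
        if cpus <= 0:
            raise ValueError("cpus must be positive")
        flags += ["--cpus", str(cpus)]
    if memory is not None:
        flags += ["--memory", memory]  # e.g. "512m" or "2g"
    return flags


limited_run = ["docker", "run", *resource_flags(cpus=1.5, memory="512m"), "my-app:1.0.0"]
```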

4. Utilize Caching

Docker provides caching mechanisms that can speed up builds and deployments. By intelligently leveraging Docker’s cache, you can avoid unnecessary reprocessing of steps and reduce build times.
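The key property of the build cache is that once one step has to be rebuilt, every step after it is rebuilt as well. The following simplified model (an illustration, not Docker's actual cache implementation) shows why copying the dependency manifest before the application source pays off.

```python
def layers_to_rebuild(steps, changed_inputs):
    """Return the instructions that must be re-run.

    `steps` is a list of (instruction, input_name) pairs in Dockerfile
    order; input_name is None for steps that read no files from the
    build context.
    """
    for index, (_, input_name) in enumerate(steps):
        if input_name in changed_inputs:
            # The first invalidated step invalidates everything after it.
            return [instruction for instruction, _ in steps[index:]]
    return []


cache_friendly = [
    ("FROM python:3.12-slim", None),
    ("COPY requirements.txt .", "requirements.txt"),
    ("RUN pip install -r requirements.txt", None),
    ("COPY . .", "source"),
]
naive = [
    ("FROM python:3.12-slim", None),
    ("COPY . .", "source"),
    ("RUN pip install -r requirements.txt", None),
]

after_code_change_friendly = layers_to_rebuild(cache_friendly, {"source"})
after_code_change_naive = layers_to_rebuild(naive, {"source"})
```

In this model a source-only change re-runs just the final COPY in the cache-friendly ordering, whereas the naive ordering also repeats the dependency installation.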

5. Enable Docker Swarm Mode

If you run containers across several hosts, enabling Swarm mode can help enhance performance. Swarm mode allows for distributed deployments and load balancing, improving scalability and resilience.

6. Monitor and Optimize

Regularly monitor your Docker containers and their performance metrics. By identifying bottlenecks and areas of improvement, you can optimise your containers for better performance. Utilise monitoring tools like the docker stats command and cAdvisor for insights into resource utilisation and container health.
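As a small example of acting on such metrics, the sketch below parses comma-separated output as produced by `docker stats --no-stream --format "{{.Name}},{{.CPUPerc}},{{.MemPerc}}"` and flags busy containers. The sample data and the 80% threshold are made up for illustration.

```python
def parse_stats(output):
    """Parse lines of the form `name,cpu%,mem%` into a dictionary."""
    stats = {}
    for line in output.strip().splitlines():
        name, cpu, memory = line.split(",")
        stats[name] = {
            "cpu_percent": float(cpu.rstrip("%")),
            "memory_percent": float(memory.rstrip("%")),
        }
    return stats


def over_cpu_threshold(stats, cpu_limit):
    """Names of containers whose CPU usage exceeds the limit."""
    return sorted(name for name, usage in stats.items() if usage["cpu_percent"] > cpu_limit)


# Made-up sample output, not measurements from a real system.
sample_output = "web-1,12.50%,3.10%\nworker-1,91.20%,40.00%\n"
stats = parse_stats(sample_output)
busy = over_cpu_threshold(stats, 80.0)
```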

7. Network Optimization

Optimising your Docker network configuration can have a significant impact on performance. Consider using overlay networks for container communication and implementing load-balancing techniques to distribute network traffic efficiently.

8. Container Restart Policies

Configure appropriate restart policies for your containers. By defining restart parameters, you can ensure that containers are automatically restarted in case of failures or crashes, minimising downtime and improving overall availability.
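Restart behaviour is set with the `--restart` option of `docker run`. The helper below is a sketch that validates the policy name and builds the option; the retry count is an arbitrary example.

```python
VALID_POLICIES = {"no", "always", "unless-stopped", "on-failure"}


def restart_flag(policy, max_retries=None):
    """Build the `--restart` option for `docker run`."""
    if policy not in VALID_POLICIES:
        raise ValueError(f"unknown restart policy: {policy!r}")
    if max_retries is not None:
        # A retry limit is only meaningful for the on-failure policy.
        if policy != "on-failure":
            raise ValueError("max_retries only applies to on-failure")
        return ["--restart", f"on-failure:{max_retries}"]
    return ["--restart", policy]


resilient_run = ["docker", "run", "--detach", *restart_flag("on-failure", 5), "my-app:1.0.0"]
```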

By implementing these tips and best practices, you can optimise the performance of your Docker containers and ensure smooth and efficient operations in your environment.

Managing Containers with Docker: Essentials for Efficient Deployment

Efficiently managing and deploying containers is essential for maximising the benefits of Docker. Here are the essentials for managing Docker containers:

1. Container Management with Docker

Docker provides a range of commands and tools for managing containers effectively. Some essential Docker commands for container management include:

- docker run: Creates and starts a new container from an image.

- docker stop: Stops a running container.

- docker restart: Restarts a container.

- docker rm: Removes a container.

- docker ps: Lists the running containers.

- docker exec: Runs a command in a running container.
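Put together, a container's life cycle with these commands might look like the following sketch. The container name, image, and the command passed to exec are hypothetical.

```python
def lifecycle_commands(name, image, probe):
    """Ordered commands covering a container's life from start to removal."""
    return [
        ["docker", "run", "--detach", "--name", name, image],  # create and start
        ["docker", "ps"],                                       # confirm it is running
        ["docker", "exec", name, *probe],                       # run a command inside it
        ["docker", "restart", name],                            # restart it
        ["docker", "stop", name],                               # stop it
        ["docker", "rm", name],                                 # remove it
    ]


steps = lifecycle_commands("web-1", "my-app:1.0.0", ["ls", "/app"])
```

Note that a container has to be stopped before `docker rm` will remove it, which is why stop comes first.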

2. Efficient Deployment with Docker

Efficiently deploying containers with Docker involves various considerations. Some of these include:

- Optimising Resource Utilisation: Properly configuring container resource allocation helps maximise performance and avoid resource wastage.

- Continuous Integration and Deployment: Integrating Docker into CI/CD workflows enables rapid and automated deployment of applications.

- Infrastructure Scaling: Docker’s ability to scale containers horizontally allows for efficiently managing increased traffic and workload.

- Monitoring and Logging: Implementing monitoring and logging strategies helps detect issues and track container performance.

3. Challenges and Solutions for Scaling Docker Container Deployments

Scaling Docker container deployments presents unique challenges. Some common challenges include:

- Networking and Service Discovery: Properly managing container networking and implementing service discovery mechanisms are crucial for seamless scaling.

- Load Balancing: Implementing load balancing strategies helps distribute traffic evenly across containers.

- Storage Management: Efficiently managing storage volumes and implementing scalable storage solutions is essential for containerised applications.

By understanding and effectively managing Docker containers, organisations can ensure efficient deployment and maximise the benefits of containerisation in their software development and deployment processes.

Securing Docker Containers: Best Practices and Tools

When it comes to containerisation, security is of utmost importance. Here are some best practices and tools to secure your Docker containers:

1. Update and Patch Regularly

Keep your Docker installation and containers up to date by regularly applying security patches and updates. This helps protect against known vulnerabilities.

2. Use Official Images

Opt for official images from trusted sources when selecting base images for your containers. These images are regularly maintained and updated, reducing the risk of using insecure or compromised images.

3. Limit Privileges

Minimise the privileges given to container processes. Use the principle of least privilege to ensure that containers only have the necessary permissions to perform their intended tasks.
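In practice, least privilege often translates into a handful of `docker run` options. The sketch below assembles one possible set; the user ID and the retained capability are assumptions for illustration, and not every application will work with all of these restrictions.

```python
def hardening_flags(user="1000:1000", keep_capabilities=()):
    """Build `docker run` options that reduce a container's privileges."""
    flags = [
        "--user", user,                              # run as a non-root user
        "--cap-drop", "ALL",                         # drop all Linux capabilities
        "--read-only",                               # read-only root filesystem
        "--security-opt", "no-new-privileges",       # block privilege escalation
    ]
    for capability in keep_capabilities:
        flags += ["--cap-add", capability]           # add back only what is needed
    return flags


hardened_run = [
    "docker", "run",
    *hardening_flags(keep_capabilities=["NET_BIND_SERVICE"]),
    "my-app:1.0.0",
]
```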

4. Implement Access Control and Authentication

Control access to your Docker environment by implementing robust authentication mechanisms. Utilise features such as user namespaces, role-based access control (RBAC), and strong password policies to protect against unauthorised access.

5. Use Container Isolation

Use Docker’s built-in isolation features, such as container namespaces and cgroups, to prevent containers from interacting with or affecting each other. This helps contain potential security breaches.

6. Enable Resource Constraints

Set resource limits for containers to prevent them from consuming excessive resources and potentially disrupting other containers or the host system. This helps ensure the stability and availability of your Docker environment.

7. Monitor Containers and Logs

Implement container monitoring and centralised logging to gain visibility into container activities and detect suspicious or malicious behaviour. Regularly review and analyse logs to identify potential security incidents.

8. Scan for Vulnerabilities

Regularly scan your Docker images and containers for known vulnerabilities using vulnerability scanning tools like Docker Security Scanning, Anchore, or Clair. Address identified vulnerabilities promptly.

9. Employ Network Segmentation

Segregate your Docker network into different segments or subnets to limit container communication. This reduces the potential impact of a security breach on other containers or the host network.
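A simple form of segmentation is a dedicated user-defined network for backend containers. The sketch below builds the commands to create an internal bridge network (one without external connectivity) and attach containers to it; the network and container names are hypothetical.

```python
def segmented_network_commands(network, containers, internal=True):
    """Commands to create a network and connect containers to it."""
    create = ["docker", "network", "create", "--driver", "bridge"]
    if internal:
        create.append("--internal")  # no access to external networks
    create.append(network)
    connects = [["docker", "network", "connect", network, container] for container in containers]
    return [create, *connects]


network_steps = segmented_network_commands("backend", ["api", "database"])
```

Containers that are not attached to the `backend` network cannot reach the database directly, which limits what an attacker can touch from a compromised frontend container.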

10. Consider Runtime Defense Tools

Explore using runtime defence tools like Falco, Sysdig, or AppArmor to monitor and protect containers in real-time. These tools provide additional layers of security against threats.

By following these best practices and utilising security tools, you can enhance the security posture of your Docker containers and protect your applications and data from potential threats.

Orchestrating Containers with Docker: Exploring Docker Swarm and Kubernetes

Container orchestration is crucial in managing and deploying containers efficiently in a Docker environment. Two popular options for orchestrating Docker containers are Docker Swarm and Kubernetes.

Docker Swarm

Docker Swarm is a native clustering and orchestration solution provided by Docker. It allows users to create and manage a cluster of Docker nodes, forming a Docker Swarm. In a Swarm, containers can be scheduled and distributed across multiple nodes, ensuring high availability and scalability.

Key features of Docker Swarm include:

- Simple setup and configuration

- Automatic load balancing and service discovery

- Horizontal scaling of services

- Rolling updates and zero-downtime deployments

- Secure communication between nodes

Docker Swarm is an excellent choice for users who are already familiar with Docker and prefer a more straightforward, lightweight solution for container orchestration.
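To give a feel for the Swarm workflow, the sketch below assembles the commands for creating a replicated service, scaling it, and rolling out a new image. The service name, image tags, and ports are hypothetical, and the commands assume a swarm has already been initialised with `docker swarm init`.

```python
def service_create_command(name, image, replicas, published_port, target_port):
    """Command to create a replicated Swarm service."""
    return [
        "docker", "service", "create",
        "--name", name,
        "--replicas", str(replicas),
        "--publish", f"{published_port}:{target_port}",
        image,
    ]


def service_scale_command(name, replicas):
    """Command to change the number of replicas of a service."""
    return ["docker", "service", "scale", f"{name}={replicas}"]


def service_update_command(name, image):
    """Command to roll out a new image version to a service."""
    return ["docker", "service", "update", "--image", image, name]


create = service_create_command("web", "my-app:1.0.0", 3, 8080, 80)
scale = service_scale_command("web", 5)
update = service_update_command("web", "my-app:1.1.0")
```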

Kubernetes

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform initially developed by Google. It provides a highly scalable and flexible environment for managing containerised applications across a cluster of nodes.

Here are some key features and benefits of Kubernetes:

- Automatic scaling and load balancing

- Automatic rollout and rollback of deployments

- Advanced scheduling and resource management

- Self-healing capabilities with auto-restart and replication

- Service discovery and external networking

Kubernetes is known for its robustness, scalability, and extensive ecosystem of tools and plugins. It is a popular choice for organisations with complex containerised applications and a need for advanced orchestration capabilities.
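In Kubernetes, the desired state of an application is described declaratively. The sketch below builds a minimal Deployment manifest as a Python dictionary and serialises it to JSON, which `kubectl apply -f` accepts alongside YAML. The application name, image, and port are hypothetical.

```python
import json


def deployment_manifest(name, image, replicas, container_port):
    """Build a minimal Kubernetes Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": container_port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("my-app", "registry.example.com/team/my-app:1.0.0", 3, 80)
manifest_json = json.dumps(manifest, indent=2)
```

Kubernetes continuously compares the running pods with this description and starts or stops replicas to match it, which is the basis of its self-healing behaviour.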

Choosing between Docker Swarm and Kubernetes depends on the specific requirements of your project and the level of complexity you are comfortable with. Both options offer powerful features for container orchestration, and evaluating your needs and preferences is recommended before making a decision.

Docker Beyond Development: Deploying Containers in Production Environments

When deploying Docker containers in production environments, several considerations must be kept in mind. Here are some key points to address:

Considerations for Deployment

1. Scalability: Ensure your infrastructure can handle the scale and demand of production workloads. Design your deployment to be horizontally scalable, allowing you to add or remove containers as needed.

2. High Availability: Implement strategies to minimise downtime and ensure continuous availability. Utilise load balancing and fault tolerance techniques to ensure your containers are always accessible.

3. Resource Management: Optimise resource allocation, such as CPU, memory, and storage, to maximise efficiency and avoid bottlenecks. Use container orchestration tools to manage resources and distribute workloads effectively.

Integration with Existing Systems and Workflows

1. Networking: Ensure that Docker containers can communicate with other components of your production system. Set up network configurations, such as overlay or virtual private networks, to enable seamless communication.

2. Data Management: Plan for data persistence and storage requirements. Consider using external storage solutions or volume mounts to ensure containers can access data properly.

3. Automation and CI/CD: Integrate Docker deployments into your continuous integration and deployment (CI/CD) workflows. Automate the build, testing, and deployment processes using tools like Jenkins or GitLab CI.

Best Practices for High Availability and Reliability

1. Load Balancing: Implement load balancers to distribute traffic across multiple containers and ensure optimal performance. This helps prevent overloading individual containers and provides redundancy.

2. Monitoring and Logging: Use monitoring tools to keep track of the health and performance of your Docker containers. Set up logging to capture and analyse logs, aiding in troubleshooting and performance optimisation.

3. Disaster Recovery: Have a comprehensive backup and recovery strategy. Regularly back up your container configurations and data to minimise the impact of any potential failures.
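One widely used way to back up a named volume is to mount it into a short-lived helper container and archive its contents to a host directory. The sketch below builds such a command; the volume name, backup directory, archive name, and the choice of `ubuntu` as helper image are all assumptions for illustration.

```python
def volume_backup_command(volume, backup_dir, archive_name, helper_image="ubuntu"):
    """Command that archives a named volume into a host directory."""
    return [
        "docker", "run", "--rm",                   # remove the helper afterwards
        "--volume", f"{volume}:/data:ro",          # the volume, mounted read-only
        "--volume", f"{backup_dir}:/backup",       # host directory for the archive
        helper_image,
        "tar", "cf", f"/backup/{archive_name}", "-C", "/data", ".",
    ]


backup = volume_backup_command("app-data", "/srv/backups", "app-data.tar")
```

For databases, prefer the database's own dump tooling or stop the container first, since archiving files that are being written can produce an inconsistent backup.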

By considering these factors and following best practices, you can ensure a smooth and successful deployment of Docker containers in production environments.

Conclusion

In conclusion, Docker is a powerful tool for containerisation that offers a wide range of benefits for software development. Docker streamlines the development process and promotes consistency across different environments by allowing applications to be packaged and distributed as portable containers. It also provides advantages for testing and deployment, enabling faster and more efficient software delivery.

When comparing Docker with Podman, it is essential to consider factors such as performance and security. While both tools offer similar functionalities, Docker has a larger user community and a more mature ecosystem. However, Podman may be a viable alternative for those seeking a more lightweight containerisation solution.

Getting started with Docker involves installing and configuring the tool for your operating system. Once set up, containerising applications with Docker is a relatively straightforward process that offers flexibility and scalability. Following best practices to optimise container performance and ensure efficient deployment is crucial.

Securing Docker containers is essential, and Docker provides various security features. Additionally, using recommended security tools can further enhance container security. Container orchestration with Docker Swarm or Kubernetes is valuable for managing and scaling container deployments, depending on your specific needs.

Deploying Docker containers in a production environment requires careful consideration and integration with existing systems and workflows. Following best practices for high availability and reliability ensures smooth operations.

In summary, Docker is a versatile tool that empowers developers to unlock the power of containerisation. Software development teams can maximise Docker’s benefits and accelerate their development processes by understanding its basics, exploring its use cases, and following best practices.