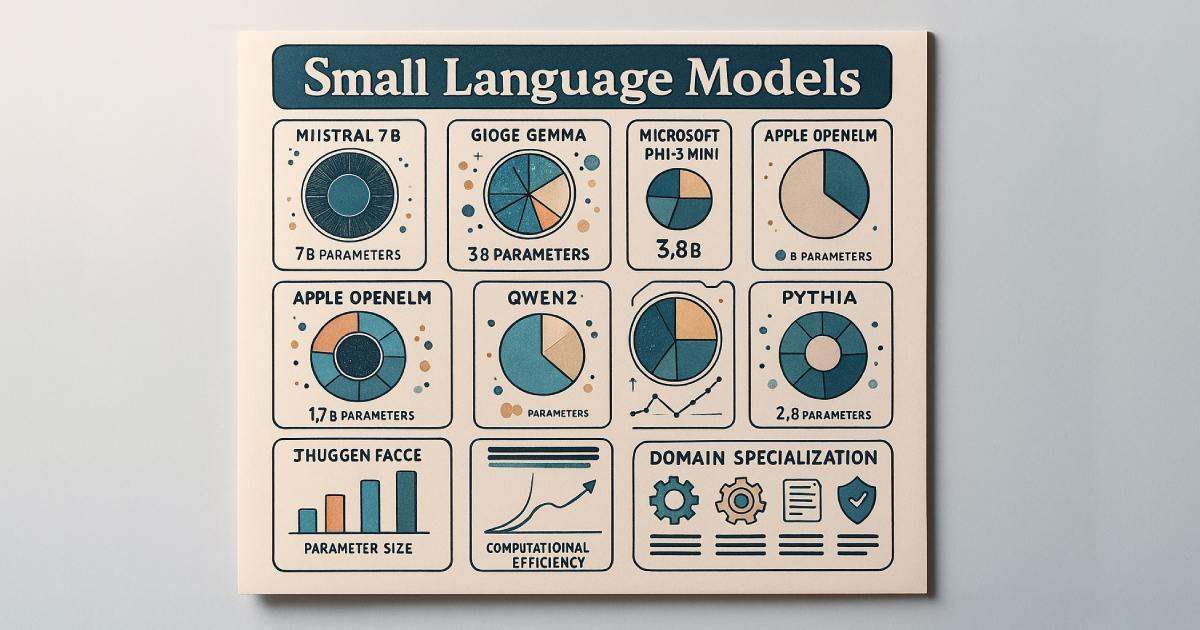

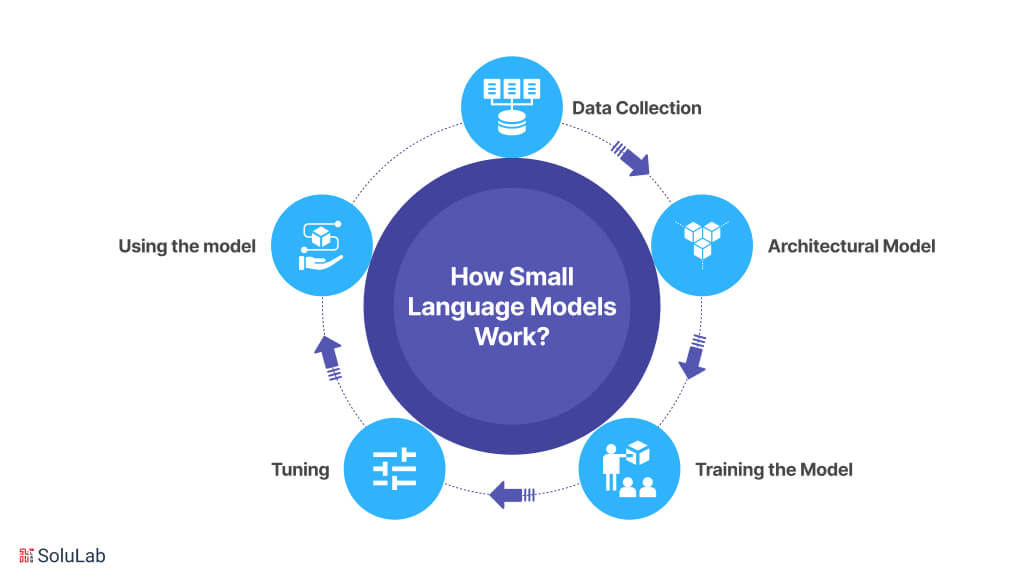

Overview of Small Language Models

Definition of Small Language Models

Small language models are AI models that perform natural language processing using fewer parameters and less computational power than larger models. They typically have fewer layers and smaller hidden dimensions, which allows for faster training and inference. This makes small language models particularly attractive for deployment in resource-constrained environments.

To clarify, small language models can be defined by the following characteristics:

- Fewer Parameters: Typically under 100 million parameters, allowing for reduced processing time.

- Limited Computational Power: Designed to function on devices with less processing power, such as mobile phones or edge devices.

- Task-Specific Optimization: Often tailored to specific applications, enhancing performance on those tasks.
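
To make the link between "fewer layers and dimensions" and "fewer parameters" concrete, here is a rough back-of-the-envelope estimate for a GPT-style decoder. It assumes the common approximation of about 12·d² weights per Transformer layer (4·d² for the attention projections plus 8·d² for a feed-forward block with a 4× expansion) plus a vocab_size × d embedding table, and it ignores biases, layer norms, and positional embeddings. The function name and the example configurations are illustrative, not taken from any specific model.

```python
def estimate_params(num_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a GPT-style decoder-only Transformer.

    Assumes 4*d^2 attention weights (Q, K, V and output projections) and
    8*d^2 feed-forward weights (d -> 4d -> d) per layer, plus a token
    embedding table. Biases, layer norms and positional embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return num_layers * per_layer + embeddings


# A hypothetical small configuration: 6 layers, 512 dimensions, 32k vocabulary.
small = estimate_params(num_layers=6, d_model=512, vocab_size=32_000)
print(f"{small:,}")  # 35,258,368 -> comfortably under 100 million

# Doubling the depth and widening to 768 dimensions already crosses that line.
wider = estimate_params(num_layers=12, d_model=768, vocab_size=50_257)
print(f"{wider:,}")  # 123,532,032
```

Because the per-layer cost grows with the square of the hidden dimension, width has a larger effect on model size than depth under this approximation.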

Applications of Small Language Models

The practical applications of small language models are vast and varied, demonstrating their importance in today’s AI landscape. Here are some common use cases:

- Chatbots: Many customer service platforms utilize small language models to provide real-time responses to user queries, enhancing customer interaction.

- Text Classification: They are frequently employed for tasks such as spam detection or sentiment analysis, enabling businesses to sift through large volumes of text efficiently.

- Personal Assistants: Small language models often power the natural language understanding capabilities of virtual assistants, making them more intuitive.

- Language Translation: While not as powerful as larger models, they can provide satisfactory translations in specific contexts for users needing quick language conversion.

Small language models make AI efficient and accessible across a wide range of platforms and devices, in both everyday and specialized applications.

Evolution of Language Models

Historical Development

The evolution of language models reflects progress in computing power and algorithms over the years. Early language models relied heavily on statistical approaches, counting n-grams (contiguous sequences of n words) to capture language patterns. This method, while foundational, struggled to capture context beyond a few neighboring words and to represent meaning.
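
As an illustration of the n-gram approach, the following is a minimal bigram (n = 2) model that estimates the probability of a word given only the word before it, using raw counts. The `<s>`/`</s>` boundary tokens and the toy corpus are assumptions made for the example; real n-gram systems also apply smoothing so that unseen word pairs do not get zero probability.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Return P(word | previous word) estimated from raw bigram counts."""
    bigram_counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark sentence boundaries so first and last words get context too.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            bigram_counts[prev][word] += 1

    # Maximum-likelihood estimate: count(prev, word) / count(prev, anything).
    model = {}
    for prev, next_counts in bigram_counts.items():
        total = sum(next_counts.values())
        model[prev] = {word: count / total for word, count in next_counts.items()}
    return model


corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram_model(corpus)
print(model["the"])  # roughly {'cat': 0.67, 'dog': 0.33}
```

The model's entire view of context is the single preceding word, which is exactly the limitation described above.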

Over the decades, the field passed through several distinct stages:

- Rule-Based Systems: Hand-written rule systems, prominent through the 1980s, allowed for more sophisticated language processing but were rigid and required extensive human effort to build and maintain.

- Statistical Methods: By the end of the 20th century, statistical methods had become the norm, powering statistical machine translation and laying the foundations of today’s models.

Each of these stages built upon the last, setting the groundwork for the rapid developments we see today.

Advancements in Modern Language Models

The introduction of deep learning revolutionized the landscape of language modeling. Instead of relying solely on manual feature engineering, modern models use neural networks to learn language patterns directly from data.

Prominent advancements include:

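- Transformer Architecture: Introduced in 2017, this architecture dramatically improved how models capture context. Its self-attention mechanism relates every word in a sequence to every other word and lets the whole sequence be processed in parallel rather than one word at a time.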

- Transfer Learning: Fine-tuning pre-trained models lets relatively small task-specific datasets yield substantial performance gains, making strong language models accessible without training from scratch.
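
To show what capturing context means mechanically, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation inside the Transformer. For simplicity it assumes the query, key, and value vectors are given directly; real Transformers compute them with learned projection matrices, use multiple attention heads, and run these loops as batched matrix multiplications on a GPU. Each output row depends only on its own query, which is why the computation parallelizes across the sequence.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return one context vector per query.

    Each output is a weighted average of the value vectors, where the weights
    are softmax(q . k / sqrt(d_k)) over all keys in the sequence.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:  # queries are independent, so this loop can run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        context = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(context)
    return outputs


# Toy 3-token sequence with 2-dimensional vectors (values chosen arbitrarily).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(tokens, tokens, tokens))
```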

This evolution has produced high-performance models like BERT and GPT, establishing new standards in natural language processing tasks. These advancements enable more natural and intuitive interaction with AI, bridging the gap between human language and machine understanding. Reflecting on this journey, it’s clear that every step has greatly enhanced the abilities we now rely on.

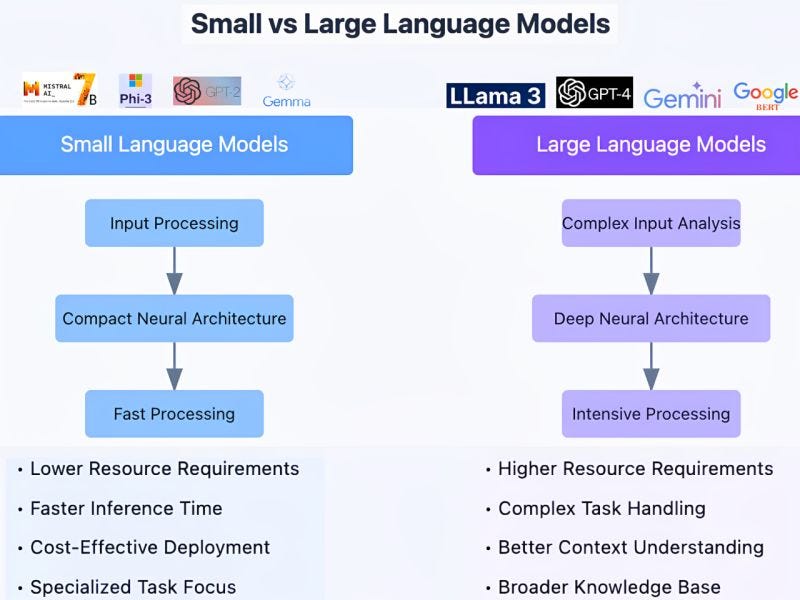

Differences Between Small and Large Language Models

Size and Complexity

As the conversation about language models continues, one cannot overlook the stark differences between small and large language models. At their core, size and complexity are the primary distinguishing factors.

Small language models typically range from a few million to under 100 million parameters, making them lightweight and agile. This small footprint means they can run efficiently on devices with limited computational resources, such as smartphones or IoT devices.

In contrast, large language models boast billions of parameters, enabling them to capture intricate language patterns and context. This leads to:

- Higher Complexity: Larger models can represent relationships and nuances in language more effectively, but they require more complex training processes.

- Increased Size: The sheer size of larger models means they demand substantial computational power for both training and inference, often relying on powerful hardware or cloud computing.
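
The size gap translates directly into memory. A simple sketch, assuming the memory for the weights alone is parameters × bytes per parameter (4 bytes for 32-bit floats, 2 for 16-bit, 1 for 8-bit quantization); activations, caches, and optimizer state during training add more on top. The 7-billion-parameter figure is just an illustrative "large" model size.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Approximate memory (GB, where 1 GB = 1e9 bytes) needed just to hold the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, params in [("small (100M)", 100_000_000), ("large (7B)", 7_000_000_000)]:
    for precision in ("fp32", "fp16", "int8"):
        print(f"{name:>12} {precision}: {weight_memory_gb(params, precision):6.1f} GB")
```

At 16-bit precision, the small model needs about 0.2 GB for its weights, while the 7B model needs about 14 GB, which is beyond most phones and typically calls for a high-memory GPU or cloud hardware.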

Performance and Capabilities

The distinctions become even more noticeable in performance and capabilities. Although small models are efficient, they often struggle with nuanced tasks or long texts. They work well for specific tasks such as simple chatbots or text classification but may fall short in more complex interactions.

On the other hand, large language models demonstrate remarkable capabilities, including:

- Context Understanding: Their vast training datasets and complexity allow for deeper comprehension of context, making them effective across a wide range of tasks such as content generation and summarization.

- Generalization: With billions of parameters, they can generalize what they learn across domains, providing richer interactions and more nuanced responses.

Small language models are helpful for specific tasks, while large models expand what is possible in natural language processing and human-AI interaction. This distinction shapes how industries adopt these technologies to meet their unique needs.

Challenges and Limitations

Data Efficiency

While the advancements in language models have been remarkable, they come with challenges. A major one is data efficiency. Small language models often require a substantial amount of high-quality data to reach practical performance. In common scenarios, such as training a chatbot, a limited dataset can lead to unsatisfactory results.

- Data Sparsity: Small models can struggle to generalize if the training data does not adequately cover the language’s diversity and intricacies.

- Quality Over Quantity: It’s not just about having more data; the quality of data plays a critical role. Poor-quality data can distort training and reduce a model’s effectiveness.
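
As a concrete example of favoring quality over quantity, a minimal cleaning pass might drop near-empty examples and exact duplicates (after normalizing case and whitespace) before training. The function name and the `min_words` threshold are hypothetical; production pipelines typically add language detection, near-duplicate detection, and content filtering on top.

```python
def clean_dataset(examples, min_words=3):
    """Drop very short examples and exact duplicates (ignoring case and spacing)."""
    seen = set()
    cleaned = []
    for text in examples:
        # Normalize so "Hello  there" and "hello there" count as the same example.
        normalized = " ".join(text.lower().split())
        if len(normalized.split()) < min_words:
            continue  # too short to carry useful signal
        if normalized in seen:
            continue  # duplicate of an example we already kept
        seen.add(normalized)
        cleaned.append(text.strip())
    return cleaned


raw = ["Hello there friend", "hello  there FRIEND", "hi", "", "How do I reset my password?"]
print(clean_dataset(raw))  # ['Hello there friend', 'How do I reset my password?']
```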

Conversely, large models, while capable of synthesizing knowledge from vast datasets, also face data efficiency challenges. If their training data is unbalanced, they may overfit to the domains that dominate it.

Computational Resource Requirements

The computational resource demands for both small and large language models present another barrier. Small models, despite their efficiency, can still be limited in scope if hardware constraints restrict their training capabilities.

On the flip side, large language models require significant computational resources, which often translates to:

- High Energy Consumption: Training and deploying large models can lead to substantial energy usage, raising concerns about their environmental impact.

- Cost Implications: Powerful GPUs and extensive cloud computing services can become financially prohibitive, particularly for smaller organizations or startups looking to incorporate cutting-edge technology.
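
To put the cost gap into numbers, a widely used rule of thumb estimates Transformer training compute as roughly 6 × parameters × training tokens floating-point operations. The sketch below applies it; the token counts, the 1e14 FLOP/s per-GPU throughput, and the 40% utilization are illustrative assumptions, not measurements of any particular model or hardware.

```python
def training_flops(num_params: int, num_tokens: int) -> int:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens


def gpu_hours(total_flops: float, peak_flops_per_sec: float, utilization: float = 0.4) -> float:
    """Convert FLOPs to GPU-hours given an assumed peak throughput and utilization."""
    effective = peak_flops_per_sec * utilization
    return total_flops / effective / 3600


ASSUMED_PEAK = 1e14  # hypothetical per-GPU throughput in FLOP/s

small = training_flops(100_000_000, 2_000_000_000)        # 100M params, 2B tokens
large = training_flops(7_000_000_000, 1_000_000_000_000)  # 7B params, 1T tokens

print(f"small: {gpu_hours(small, ASSUMED_PEAK):,.0f} GPU-hours")
print(f"large: {gpu_hours(large, ASSUMED_PEAK):,.0f} GPU-hours")
print(f"ratio: {large / small:,.0f}x")
```

Under these assumptions the larger run needs about 35,000 times more compute than the smaller one, which is where energy use and cloud bills escalate.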

Navigating these challenges demands a careful balance. Organizations should plan according to their resources, data quality, and model capabilities. This nuanced understanding of data efficiency and computational requirements is vital for leveraging language models effectively in various applications.

Ethical Considerations

Bias and Fairness

As the use of language models expands, ethical considerations come to the forefront, particularly bias and fairness. Training data often contains societal biases, which language models can replicate or amplify in their outputs.

Imagine developing an AI for hiring processes that inadvertently favors one demographic over others due to biased training data. This could lead to unfair practices and discrimination that impact lives and careers.

Key points to consider include:

- Training Data Representation: If a dataset is skewed—in terms of gender, race, or socioeconomic status—the model will likely produce skewed results.

- Mitigation Strategies: Employing techniques such as fairness-aware modeling and ongoing bias audits is crucial to creating equitable AI applications.
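
One simple check used in bias audits is demographic parity: compare how often a model produces a positive outcome (for example, advancing a candidate in the hiring scenario above) for each group. The sketch below computes per-group selection rates and the gap between the highest and lowest. The data is invented for illustration, and demographic parity is only one of several fairness metrics, which can conflict with one another.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Invented screening decisions: 1 = advance candidate, 0 = reject.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

Running a check like this regularly on fresh model outputs, rather than once at launch, is what turns it into an ongoing audit.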

Ensuring fairness in AI is a moral duty that requires effort from both developers and stakeholders.

Privacy and Security Concerns

Another pressing ethical concern revolves around privacy and security. As language models become increasingly capable of processing vast amounts of personal data, ensuring data protection is paramount.

Consider the scenario of a language model used in customer service that processes sensitive information. If strong privacy measures are not implemented, this data can become vulnerable.

Several considerations emerge in this space:

- Data Anonymization: To protect individual privacy, sensitive data should be anonymized before training. This reduces the risk that the model’s outputs inadvertently reveal personal information.

- Regulatory Compliance: Adhering to laws like GDPR (General Data Protection Regulation) is critical in today’s digital landscape, requiring organizations to be transparent about how data is used.
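
A first, very rough step toward anonymization is to mask obvious identifiers such as email addresses and phone numbers before text enters a training set. The regular expressions below are simplified assumptions: they will miss many formats, can over-match (a date like 2024-01-15 also looks like a phone number to the second pattern), and do nothing about names or street addresses, so real pipelines typically combine them with named-entity recognition and human review.

```python
import re

# Simplified patterns; real-world PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-number-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


ticket = "Customer jane.doe@example.com called from +1 555-123-4567 about a refund."
print(redact_pii(ticket))
# Customer [EMAIL] called from [PHONE] about a refund.
```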

In conclusion, addressing bias and protecting privacy are essential for building ethical language models. Developers should prioritize fairness and privacy in their work so that the technology benefits society as a whole. This ethical foundation not only strengthens public trust but also fosters a more equitable digital landscape.

Future Trends in Small Language Models

Research Directions

Ongoing research in natural language processing points to significant potential for small language models. Many current research directions aim to enhance their capabilities while maintaining efficiency. Notable trends include:

- Few-Shot Learning: This approach allows models to perform tasks with very few examples, making them adaptable and reducing the need for large datasets.

- Hybrid Models: Combining the strengths of both small and large language models could lead to more versatile applications, maximizing efficiency without sacrificing performance.

- Explainability: With increasing emphasis on understanding AI decisions, researchers are exploring ways to make small models more interpretable, allowing users to understand how conclusions are drawn.
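
One common way to combine small and large models is a cascade: send every request to the small model first and escalate to the large model only when the small model is not confident. The sketch below assumes each model is a function returning an answer and a confidence score between 0 and 1; the stub models, the 0.8 threshold, and all names are hypothetical placeholders rather than any real API.

```python
from typing import Callable, Tuple

# A "model" here is any function mapping a query to (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def cascade(query: str, small_model: Model, large_model: Model,
            threshold: float = 0.8) -> Tuple[str, str]:
    """Answer with the small model when it is confident; otherwise escalate."""
    answer, confidence = small_model(query)
    if confidence >= threshold:
        return answer, "small"
    answer, _ = large_model(query)
    return answer, "large"


# Hypothetical stand-ins for real models.
def small_stub(query: str) -> Tuple[str, float]:
    if "opening hours" in query.lower():
        return "We are open 9am to 5pm.", 0.95
    return "", 0.2


def large_stub(query: str) -> Tuple[str, float]:
    return f"Detailed answer to: {query}", 0.9


print(cascade("What are your opening hours?", small_stub, large_stub))
print(cascade("Compare these two mortgage offers for me.", small_stub, large_stub))
```

The threshold is the key trade-off: lowering it keeps more traffic on the cheap small model, raising it sends more to the large one. Confidence scores from language models are often poorly calibrated, so in practice the threshold would need tuning on real data.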

This focus on innovation could help small language models evolve into an indispensable part of the AI toolkit.

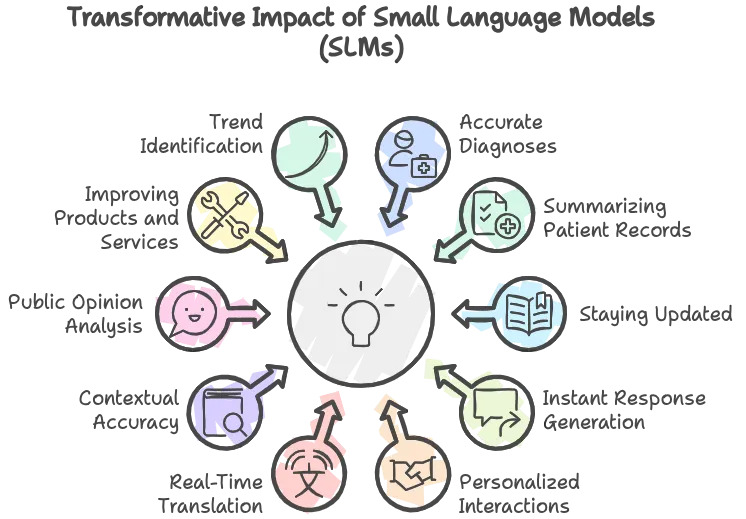

Potential Impact on Various Industries

The integration of advanced small language models can significantly reshape multiple industries. From healthcare to entertainment, the implications are vast. For instance:

- Healthcare: Small language models could help in processing medical records swiftly, summarizing patient histories, and assisting in diagnosis through natural language queries.

- Finance: In the banking sector, these models can streamline customer service interactions, providing immediate responses to routine inquiries while analyzing trends from financial communications.

- Education: Personalized tutoring applications can benefit from small language models, adapting to individual learning styles and offering tailored support based on each student’s needs.

These advancements can lead to more efficient operations, improved customer experiences, and greater accessibility of information across sectors. Ongoing research in small language models is expected to boost productivity and provide innovative solutions for industry-specific challenges. Embracing these trends may help organizations thrive in an increasingly AI-driven world.
